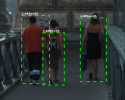

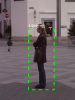

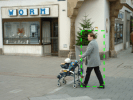

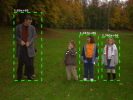

Pedestrian detection bias

This page contains a dataset for evaluating bias in pedestrian detection algorithms. Specifically, it contains age and gender labels for bounding boxes in the INRIA Person dataset (test set only).
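As a rough illustration of how per-box group labels like these could be used, the sketch below compares miss rates across groups for a detector's output. Everything in it is an assumption made for illustration: the in-memory data layout, the `(x1, y1, x2, y2)` box convention, the group names `"adult"` and `"child"`, the 0.5 IoU threshold, and the helper names `iou` and `miss_rate_by_group`. It does not reflect the dataset's actual file format or the evaluation protocol used in the publication.

```python
from collections import defaultdict


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def miss_rate_by_group(ground_truth, detections, iou_threshold=0.5):
    """Fraction of ground-truth boxes per group with no matching detection.

    ground_truth: list of (image_id, box, group) tuples (hypothetical layout).
    detections:   dict mapping image_id to a list of detected boxes.
    Each detection can be matched to at most one ground-truth box.
    """
    total = defaultdict(int)
    missed = defaultdict(int)
    used = defaultdict(set)  # detection indices already matched, per image

    for image_id, box, group in ground_truth:
        total[group] += 1
        best_idx, best_iou = None, iou_threshold
        for idx, det in enumerate(detections.get(image_id, [])):
            if idx in used[image_id]:
                continue
            overlap = iou(box, det)
            if overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is None:
            missed[group] += 1
        else:
            used[image_id].add(best_idx)

    return {group: missed[group] / total[group] for group in total}


# Toy data, invented for this example only.
ground_truth = [
    ("img1", (0, 0, 10, 20), "adult"),
    ("img1", (30, 0, 40, 20), "child"),
    ("img2", (5, 5, 15, 25), "child"),
    ("img2", (50, 5, 60, 25), "adult"),
]
detections = {
    "img1": [(1, 0, 11, 20)],
    "img2": [(50, 5, 60, 25), (5, 5, 9, 25)],
}

rates = miss_rate_by_group(ground_truth, detections)
print(rates)
```

A gap between the per-group miss rates on real data would be one possible indicator of bias. The greedy matching here is deliberately simple, and a full evaluation would normally also account for detection confidence scores and false positives.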

The dataset is described in the following publication:

- M. Brandao, “Age and gender bias in pedestrian detection algorithms,” in Workshop on Fairness Accountability Transparency and Ethics in Computer Vision, CVPR, 2019.

You can download the data here: Pedestrian detection bias. Please cite the publication above if you use the dataset.